One of the most important developments in the business, economic and financial arenas has been the recent emergence of Artificial Intelligence (AI). The advent of the AI era has also raised novel legal issues and presented regulators with a host of potential challenges. In the following guest post, Alexander Hopkins takes a look at the developing efforts of a variety of governmental regulators to address the issues that AI presents, and considers the implications of these regulatory developments for the liabilities of corporate directors and officers. Alex is Of Counsel at the Saxe Doernberger & Vita, P.C. law firm. I would like to thank Alex for allowing me to publish his article as a guest post on this site.

***************************

Artificial intelligence (AI) has ushered in a new era of innovation, transforming industries and creating unprecedented opportunities for efficiency and growth. However, with these advancements come significant challenges, particularly for directors and officers (D&Os), who are tasked with overseeing compliance, mitigating ethical risks, and managing corporate liability.

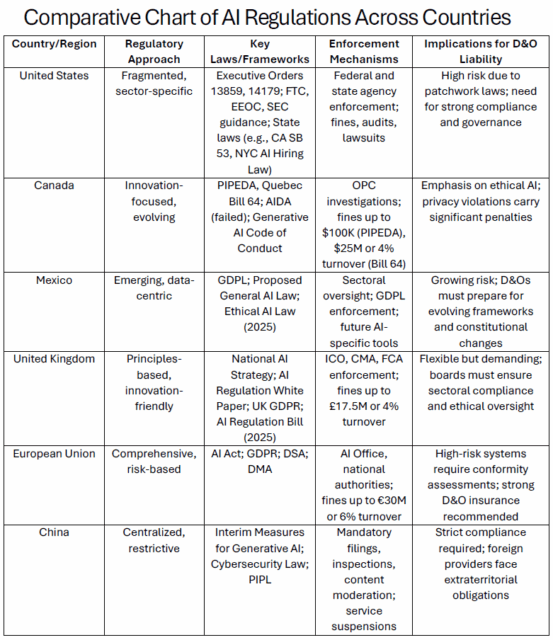

Global regulators are enacting strict AI frameworks to govern the development and deployment of these systems. As the legal and ethical landscape evolves, corporate leaders must proactively address these challenges to protect their organizations and themselves. This article examines the global regulatory landscape, focusing on the United States, Canada, Mexico, the United Kingdom, the European Union, and China, and explores the implications for D&O liability in navigating this complex environment.

Fiduciary Responsibilities in an Era of Global AI Regulation

Directors and officers are on the front lines of ensuring corporate compliance with rapidly evolving AI regulations. The complexity of this task is amplified by the varying approaches to regulation across major jurisdictions.

United States: Fragmented and Sector-Specific

The United States has adopted a fragmented, sector-specific approach to AI regulation, reflecting the country’s broader emphasis on innovation, market-driven solutions, and privacy protection. While no overarching federal AI legislation currently exists, a combination of executive orders, agency guidelines, and state laws governs AI development and deployment. This piecemeal framework creates both challenges and opportunities for businesses operating in the U.S.

Federal-Level AI Regulation and Guidance

1. Executive Orders and National AI Initiatives:

a. In 2019, Executive Order 13859, Maintaining American Leadership in Artificial Intelligence, prioritized innovation, workforce development, and international collaboration while addressing ethical and security concerns.

b. In January 2025, Executive Order 14110, Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, was revoked. It was replaced by Executive Order 14179, Removing Barriers to American Leadership in Artificial Intelligence, which emphasizes deregulation and innovation over prior safety mandates.

c. The National AI Research and Development Strategic Plan outlines goals to support responsible AI development, emphasizing transparency, accountability, and inclusiveness.

2. Sector-Specific Federal Agency Actions:

a. Federal Trade Commission (FTC):

i. The FTC enforces existing consumer protection laws to regulate deceptive AI practices. Its guidance emphasizes transparency, fairness, and accountability.

ii. Businesses deploying biased, opaque, or deceptive AI systems are subject to enforcement actions under the Federal Trade Commission Act.

b. Equal Employment Opportunity Commission (EEOC):

The EEOC investigates the use of AI in hiring and employment to ensure compliance with anti-discrimination laws like Title VII of the Civil Rights Act.

c. Department of Commerce (DoC):

Through the National Institute of Standards and Technology (NIST), the DoC develops voluntary AI risk management frameworks to guide organizations in implementing responsible AI systems.

d. Office of Science and Technology Policy (OSTP):

In 2022, the OSTP issued the Blueprint for an AI Bill of Rights, which highlighted principles such as data privacy, algorithmic transparency, and non-discrimination.

e. Securities and Exchange Commission (SEC):

The SEC oversees AI-driven algorithms in financial markets, ensuring compliance with securities laws and addressing risks like market manipulation.

3. Proposed Federal Legislation:

a. Algorithmic Accountability Act:

i. Introduced in Congress, this bill would require companies to assess the impact of AI systems on data privacy, bias, and discrimination.

ii. If enacted, it would mandate regular audits for high-risk AI applications.

b. The SANDBOX Act:

Introduced in 2025, this bill proposes regulatory waivers for AI developers to encourage innovation.

4. Privacy Legislation and AI:

While the U.S. lacks a comprehensive federal privacy law, laws such as the Health Insurance Portability and Accountability Act (HIPAA) and the Children’s Online Privacy Protection Act (COPPA) impose strict data usage and protection requirements, impacting AI systems.

State-Level AI Regulation

States are increasingly stepping in to address regulatory gaps, creating a patchwork of AI-related laws:

1. California:

a. The California Consumer Privacy Act (CCPA) and its successor, the California Privacy Rights Act (CPRA), regulate data used in AI systems, requiring companies to provide transparency, honor consumer opt-outs, and protect sensitive personal information.

b. In 2025, California passed Senate Bill 53, the Transparency in Frontier Artificial Intelligence Act, requiring large AI companies to disclose safety protocols and report critical incidents.

2. Illinois’ Biometric Information Privacy Act (BIPA):

a. BIPA mandates informed consent for the collection and use of biometric data, such as facial recognition, a critical component in AI applications. Noncompliance has led to multimillion-dollar lawsuits.

b. Separately, in 2025, Illinois banned AI-driven therapy services under the Wellness and Oversight for Psychological Resources Act, citing safety and ethical concerns.

3. New York City’s AI Hiring Regulations:

Effective 2023, NYC law requires bias audits for AI hiring tools, emphasizing transparency and accountability in employment practices.

4. Pennsylvania House Bill 1925:

Introduced legislation that would require disclosure and human oversight in AI-aided healthcare decisions.

Enforcement Mechanisms

Federal: At the federal level, enforcement mechanisms for AI-related compliance include investigations by agencies such as the Federal Trade Commission (FTC), the Equal Employment Opportunity Commission (EEOC), and the Securities and Exchange Commission (SEC). These investigations may lead to administrative fines, cease-and-desist orders, or mandated corrective action plans. For example, the FTC may pursue enforcement under the Federal Trade Commission Act for deceptive or unfair AI practices, while the EEOC may initiate actions for discriminatory outcomes in AI-driven hiring systems. Agencies may also require companies to revise algorithms, enhance transparency, or undergo third-party audits to ensure future compliance. In high-risk sectors like finance and healthcare, additional oversight may involve tailored enforcement protocols and public reporting obligations.

State:

1. California Privacy Laws (CCPA and CPRA):

a. Enforced by the California Attorney General and the newly established California Privacy Protection Agency.

b. Companies violating transparency, consumer opt-out, or data protection provisions face penalties of up to $7,500 per violation for intentional infractions.

2. Illinois Biometric Information Privacy Act (BIPA):

a. Enforced through private right of action, allowing individuals to sue companies directly for violations.

b. Penalties: $1,000 per negligent violation and $5,000 per intentional or reckless violation.

c. High-profile lawsuits have resulted in multimillion-dollar settlements, such as those involving facial recognition technology.

3. New York City AI Hiring Regulations:

a. Enforced by the NYC Department of Consumer and Worker Protection.

b. Violations of bias audit requirements or transparency rules can lead to fines of $500–$1,500 per infraction, with repeated offenses incurring escalating penalties.
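Because these state regimes price violations individually, exposure scales with the number of violations rather than with a single capped fine. The following is a minimal sketch of that arithmetic in Python, using only the per-violation amounts quoted above. The function names are hypothetical, and the violation counts are an input assumption: how violations are actually counted is a legal question the code does not attempt to answer.

```python
# Per-violation amounts in USD, as quoted in this article.
BIPA_NEGLIGENT = 1_000
BIPA_INTENTIONAL_OR_RECKLESS = 5_000
CCPA_INTENTIONAL_MAX = 7_500
NYC_FINE_LOW, NYC_FINE_HIGH = 500, 1_500


def _check_count(count: int) -> None:
    """Reject negative counts so a data-entry error cannot reduce exposure."""
    if count < 0:
        raise ValueError("violation counts must be non-negative")


def bipa_exposure(negligent: int, intentional_or_reckless: int) -> int:
    """Total BIPA statutory damages if every counted violation were proven."""
    _check_count(negligent)
    _check_count(intentional_or_reckless)
    return (negligent * BIPA_NEGLIGENT
            + intentional_or_reckless * BIPA_INTENTIONAL_OR_RECKLESS)


def ccpa_intentional_ceiling(violations: int) -> int:
    """Upper bound on CCPA/CPRA penalties for intentional violations."""
    _check_count(violations)
    return violations * CCPA_INTENTIONAL_MAX


def nyc_fine_range(infractions: int):
    """(low, high) range of fines under the NYC AI hiring rules."""
    _check_count(infractions)
    return infractions * NYC_FINE_LOW, infractions * NYC_FINE_HIGH


if __name__ == "__main__":
    # Hypothetical scenario: 10,000 people each counted as one negligent violation.
    print(bipa_exposure(negligent=10_000, intentional_or_reckless=0))
```

Even at the lower negligent rate, a deployment touching 10,000 people produces eight-figure exposure in this sketch, which is consistent with the multimillion-dollar BIPA settlements noted above.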

Canadian AI Regulations: Balancing Innovation with Responsibility

Canada has been a pioneer in AI development, home to research hubs and leading companies. As the technology progresses, Canadian regulators are implementing frameworks to ensure responsible AI use while fostering innovation.

The Artificial Intelligence and Data Act (AIDA)

The long-touted AIDA bill expired in January 2025 due to parliamentary prorogation. No federal AI law is currently in force.

Originally proposed as part of Bill C-27, AIDA sought to regulate high-impact AI systems by focusing on accountability, transparency, and harm mitigation. The bill would have imposed obligations on developers and operators, including:

Risk Assessments: Companies would have been required to conduct regular assessments of AI systems to identify potential harm.

Impact Mitigation Plans: Organizations would have been required to develop strategies to address risks identified in assessments.

Transparency Requirements: Users would have had to be informed when interacting with AI, especially in high-impact applications.

Noncompliance would have led to administrative monetary penalties or criminal charges for serious violations, placing significant liability on corporate leaders.

Ontario has enacted legislation requiring AI hiring disclosures, effective January 2026. See Bill 149, Working for Workers Four Act, 2024.

The Canadian federal government promotes a Generative AI Code of Conduct and launched an AI Strategy Task Force in September 2025.

Privacy Laws and AI

Canada’s federal Personal Information Protection and Electronic Documents Act (PIPEDA) and newer laws, such as Quebec’s Bill 64, regulate data privacy—a cornerstone of AI development. Key obligations include:

Consent Requirements: Explicit consent must be obtained for personal data used in AI training.

Accountability: Organizations must safeguard data and ensure its ethical use in AI systems.

Ethical Considerations

Canadian regulators emphasize ethical AI through initiatives like the Pan-Canadian AI Strategy, which promotes principles of fairness, transparency, and accountability. Boards must incorporate these principles into governance frameworks to avoid reputational damage and legal challenges.

Enforcement Mechanisms:

As noted above, no federal AI law is currently in force following AIDA's expiry in January 2025. In the meantime, Ontario's AI hiring disclosure requirement takes effect in January 2026, and the federal government continues to promote its Generative AI Code of Conduct alongside the AI Strategy Task Force launched in September 2025.

Privacy regulations, such as PIPEDA and Quebec’s Bill 64, integrate enforcement mechanisms to protect data privacy and ensure ethical AI development:

1. Fines and Sanctions Under PIPEDA:

a. Organizations violating data privacy provisions under PIPEDA may be subjected to investigations by the Office of the Privacy Commissioner (OPC).

b. Penalties include fines of up to $100,000 per violation.

c. The OPC may also call for remediation measures, such as data usage corrections or updated consent procedures.

2. Quebec’s Bill 64 (Modernization of Privacy):

a. Introduces stricter enforcement, including fines up to $25 million or 4% of global turnover, whichever is higher.

b. Allows private rights of action, enabling individuals to sue organizations for noncompliance with privacy regulations.

Mexican AI Regulations: Comprehensive Framework Still in Development

In Mexico, AI adoption is growing rapidly, particularly in industries such as manufacturing and healthcare. Although comprehensive AI-specific laws are still in development, Mexico’s regulatory landscape is currently shaped by broader technology and data privacy frameworks.

General Data Protection Law (GDPL)

Mexico’s Federal Law on the Protection of Personal Data Held by Private Parties (GDPL) governs data privacy, which directly impacts AI operations. Key provisions include:

Data Processing Limitations: Personal data used in AI must align with GDPL principles, including legality and proportionality.

Consent and Transparency: Organizations must obtain informed consent for data collection and disclose its intended use.

Data Subject Rights: Individuals can request access, correction, or deletion of their data, requiring AI systems to accommodate such requests.

In February 2025, Mexico proposed a constitutional amendment to grant Congress authority to legislate on AI. A General Law on AI is anticipated within 180 days of the amendment’s approval. See Proposal for the National Agenda for Artificial Intelligence for Mexico 2024-2030 – OECD.AI.

In April 2025, the Federal Law for Ethical, Sovereign, and Inclusive AI was proposed, introducing risk-based classification and transparency requirements.

Emerging AI Frameworks

Mexican policymakers are developing AI-specific strategies to regulate the technology responsibly. Initiatives such as the National Artificial Intelligence Strategy aim to promote ethical AI use while fostering economic growth. Key priorities include:

Ethical Guidelines: Promoting fairness and inclusivity in AI systems.

Public-Private Collaboration: Encouraging partnerships to develop AI governance frameworks.

Sectoral Regulations

Industries such as finance and healthcare are subject to additional, sector-specific oversight:

Financial Institutions: AI used in financial services must comply with anti-money laundering and consumer protection laws.

Healthcare: AI applications must adhere to medical privacy standards and obtain regulatory approval for use in diagnostics or treatment. A National Commission on AI in Healthcare was launched in 2025 to modernize outdated medical device regulations and attract investment.

Enforcement Mechanisms:

While Mexico’s AI regulation is in its nascent stages, enforcement relies heavily on the robust GDPL framework, sector-specific rules, and evolving ethical guidelines. Companies operating AI systems in Mexico must navigate these mechanisms to ensure compliance, avoid penalties, and maintain public trust. As the regulatory landscape matures, additional enforcement tools tailored to AI may emerge, further shaping Mexico’s approach to responsible AI governance.

United Kingdom: Innovation-Friendly but Accountable

The United Kingdom’s approach to AI regulation emphasizes innovation, balanced with responsible use. Its principles-based strategy offers flexibility to adapt to rapidly evolving technologies while leveraging existing regulatory frameworks.

Key Regulatory Frameworks and Strategies

1. National AI Strategy (2021)

The UK launched a 10-year plan to position itself as a global leader in AI development and deployment. Key pillars include:

a. Driving AI innovation to support economic growth.

b. Building trust through ethical and transparent AI systems.

c. Maintaining the UK’s competitiveness as a global AI investment hub.

This strategy underscores the importance of fostering a collaborative ecosystem among businesses, regulators, and academic institutions.

2. AI Regulation White Paper (2023)

The 2023 White Paper outlines the UK’s vision for regulating AI based on five core principles:

Safety, security, and robustness: Ensuring AI systems operate reliably.

Appropriate transparency and explainability: Making AI decisions interpretable for users.

Fairness: Preventing bias and ensuring equitable outcomes.

Accountability and governance: Mandating responsibility for AI decisions.

Contestability and redress: Providing mechanisms to challenge harmful AI outcomes.

Instead of creating a centralized regulatory body, the UK assigns oversight to existing regulators such as:

The Information Commissioner’s Office (ICO) for data privacy.

The Competition and Markets Authority (CMA) for market fairness.

The Financial Conduct Authority (FCA) for AI in financial services.

3. UK GDPR Compliance

AI applications that involve personal data processing must adhere to the UK GDPR. Key considerations for directors include:

a. Conducting Data Protection Impact Assessments (DPIAs) for high-risk AI systems.

b. Ensuring transparency in data collection and processing.

c. Providing individuals with rights to challenge AI-driven automated decisions.

4. Proposed AI-Specific Oversight

The UK government has signaled intentions to refine its AI regulatory approach, particularly for high-risk sectors such as healthcare, defense, and autonomous vehicles. These refinements may include enhanced auditing requirements and sector-specific standards. In March 2025, the AI Regulation Bill [HL] was reintroduced, proposing an AI authority, mandatory audits, and transparency obligations.

Enforcement Mechanisms

The UK relies on a decentralized enforcement model, empowering sectoral regulators to ensure compliance:

ICO Enforcement: Organizations found violating data protection laws face fines of up to £17.5 million or 4% of global annual turnover, whichever is higher.

CMA Actions: The CMA actively monitors AI’s impact on market competition, with authority to impose penalties for anti-competitive practices.

FCA Regulation: Financial services firms must ensure AI tools comply with conduct and transparency standards to mitigate risks to customers.

European Union: Comprehensive and Proactive Approach

The EU has emerged as a global leader in AI regulation, setting the tone with its Artificial Intelligence Act (AI Act). The AI Act categorizes AI systems based on their risk profiles—unacceptable, high-risk, limited risk, and minimal risk—and imposes corresponding obligations. High-risk applications, such as those in healthcare and law enforcement, must adhere to stringent requirements, including transparency, robustness, and bias mitigation.

The EU’s AI Act builds upon its robust data protection laws, notably the General Data Protection Regulation (GDPR), which mandates stringent safeguards for personal data processing. Companies must ensure AI systems align with GDPR principles of transparency, accountability, and privacy-by-design.

The Digital Services Act (DSA) and Digital Markets Act (DMA) further address the responsibilities of digital platforms, targeting issues like misinformation and algorithmic accountability. These overlapping frameworks create a comprehensive ecosystem but require careful navigation to ensure compliance.

As of August 2, 2025, key provisions of the EU AI Act took effect, including the operationalization of the AI Office and AI Board, and enforcement mechanisms for General-Purpose AI models.

Key Features of the EU AI Act:

1. Risk-Based Regulatory Framework:

The AI Act categorizes AI systems into four risk levels:

Prohibited: AI practices deemed harmful, such as those involving subliminal manipulation or exploiting the vulnerabilities of specific groups.

High-Risk: Applications in critical sectors like healthcare, law enforcement, education, and employment. These systems are subject to strict compliance requirements.

Limited Risk: Systems requiring transparency obligations, such as chatbots, which must disclose their non-human nature.

Minimal Risk: Applications like AI-enhanced productivity tools, with no specific regulatory obligations.

High-risk AI systems must undergo rigorous conformity assessments, ensuring they meet requirements for transparency, accountability, and accuracy.

2. Key Compliance Obligations:

Data Governance: High-quality, bias-free training datasets are mandated to minimize discriminatory outcomes.

Accountability: Developers must establish systems to monitor and mitigate risks during the lifecycle of an AI product.

Transparency Requirements: Users and affected parties must be informed about the AI’s nature, capabilities, and limitations.

Human Oversight: High-risk AI systems must allow for meaningful human intervention to prevent unintended harm.

3. Penalties for Noncompliance:

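Penalties are tiered. Under the Act as adopted, deploying prohibited AI systems can draw fines of up to €35 million or 7% of global annual turnover, whichever is higher. Failing to meet most other obligations, including those for high-risk systems, can draw fines of up to €15 million or 3% of global annual turnover, whichever is higher.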

4. Interaction with Existing Laws:

The AI Act aligns with the EU’s General Data Protection Regulation (GDPR), focusing on user privacy and data protection. Together, these frameworks ensure that AI systems comply with Europe’s stringent privacy standards.

5. Sectoral and Ethical Considerations:

The EU also integrates ethical AI considerations into broader initiatives, promoting fairness, sustainability, and societal well-being. Ethical guidelines established by the High-Level Expert Group on AI inform the AI Act’s design.

The EU pledged €1 billion to boost AI in healthcare, energy, and mobility as part of its AI in Science Strategy.

6. Support for Innovation:

The legislation includes provisions for regulatory sandboxes, allowing companies to test AI systems under controlled environments to encourage innovation while ensuring safety.

Implications for Businesses:

Compliance Costs: Companies deploying high-risk AI systems face increased costs related to documentation, assessments, and data management.

Cross-Border Operations: Multinational corporations must ensure that AI systems meet the EU’s stringent requirements, even if primarily developed or operated outside the EU.

Insurance Exposure: The heavy penalties associated with noncompliance highlight the importance of robust D&O insurance coverage.

Strategic Recommendations:

Organizations operating in the EU or targeting EU markets must:

Perform comprehensive risk assessments of AI systems.

Develop transparent documentation and maintain auditable records.

Monitor regulatory updates and emerging enforcement practices.
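One way to make these recommendations concrete is an internal register that records each AI system against the Act's four risk tiers and lists the obligations that follow. The sketch below is illustrative only: the class and function names are hypothetical, the example systems are invented, and the obligation lists paraphrase this article's summary rather than the text of the AI Act. Assigning a tier to a real system requires legal analysis that no lookup table can replace.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


# Obligations paraphrased from this article's summary of the AI Act.
OBLIGATIONS = {
    RiskTier.PROHIBITED: ["do not deploy"],
    RiskTier.HIGH: [
        "conformity assessment",
        "data governance",
        "transparency",
        "human oversight",
        "lifecycle risk monitoring",
    ],
    RiskTier.LIMITED: ["disclose AI nature to users"],
    RiskTier.MINIMAL: [],
}


@dataclass(frozen=True)
class AISystem:
    name: str
    purpose: str
    tier: RiskTier  # assigned by counsel or compliance, not inferred by code


def compliance_checklist(systems):
    """Map each system name to the obligations attached to its tier."""
    return {s.name: list(OBLIGATIONS[s.tier]) for s in systems}


def needs_board_attention(systems):
    """Names of systems in the two tiers that carry the heaviest exposure."""
    flagged = (RiskTier.PROHIBITED, RiskTier.HIGH)
    return [s.name for s in systems if s.tier in flagged]


if __name__ == "__main__":
    # Hypothetical register entries for illustration.
    register = [
        AISystem("resume-screener", "hiring", RiskTier.HIGH),
        AISystem("support-chatbot", "customer service", RiskTier.LIMITED),
        AISystem("spell-checker", "productivity", RiskTier.MINIMAL),
    ]
    print(needs_board_attention(register))
    print(compliance_checklist(register)["support-chatbot"])
```

Keeping the tier as an explicit, human-assigned field rather than something the code infers mirrors the auditable-records recommendation: the register documents who decided what, and the checklist follows from that decision.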

Enforcement Mechanisms

1. Risk-Based Oversight

a. Categorization of AI Systems: AI systems are divided into risk tiers (prohibited, high-risk, limited-risk, minimal-risk), with enforcement scaling according to risk level.

Prohibited AI: Practices such as subliminal manipulation and social scoring are banned.

High-Risk AI: Must pass stringent conformity assessments covering transparency, robustness, and data quality. Noncompliance with these requirements incurs significant penalties.

2. Administrative Penalties

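Severe Fines: Under the Act as adopted, penalties scale with the type of violation, and in each case the higher of the two figures applies:

Deploying prohibited systems: up to €35 million or 7% of global annual turnover.

Failing to meet most other obligations, including those for high-risk systems: up to €15 million or 3% of global annual turnover.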

3. Conformity Assessments

a. High-risk AI systems must undergo pre-market assessments conducted by independent notified bodies or self-assessment processes (depending on the nature of the system).

b. These evaluations ensure compliance with requirements for:

Data governance: Use of high-quality, bias-free training datasets.

Transparency: Informing users of the AI’s nature and functionality.

Human oversight: Mitigating unintended harm through meaningful human intervention.

4. GDPR Alignment

a. Data Protection Authorities (DPAs): Oversee AI-related data privacy issues, leveraging GDPR’s robust framework.

Organizations must comply with GDPR principles of transparency, data minimization, and privacy-by-design.

b. Fines under GDPR can reach up to €20 million or 4% of global annual turnover.

5. Regulatory Bodies

National Supervisory Authorities: Member states designate authorities responsible for monitoring compliance and enforcing the AI Act locally.

European AI Board: Coordinates enforcement across the EU, providing guidance and resolving cross-border issues.

6. Monitoring and Reporting Obligations

a. Developers and operators of high-risk AI systems must establish:

i. Risk management systems to monitor and address AI-related risks throughout the product lifecycle.

ii. Documentation and logs to demonstrate compliance.

7. Interaction with Sector-Specific Rules

Healthcare, Finance, and Law Enforcement: AI systems in these sectors must comply with additional EU regulations like the Medical Device Regulation (MDR) and anti-money laundering laws. Enforcement involves specialized regulatory bodies and cross-sector coordination.

8. Digital Services Act (DSA) and Digital Markets Act (DMA)

Platforms deploying AI must ensure algorithmic accountability and address issues like misinformation under the DSA.

Gatekeeper platforms under the DMA are held to high standards, including transparency about their AI-driven practices.

9. Regulatory Sandboxes

Innovation Support: Controlled testing environments allow companies to experiment with AI technologies under the supervision of regulators. This ensures safety while reducing barriers to market entry.

The EU’s AI Act positions the region as a leader in responsible AI governance, offering a template for other jurisdictions to emulate while addressing the global challenges posed by AI technologies. This regulatory clarity fosters a balanced environment where innovation can thrive without compromising societal values.
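Several of the penalty caps discussed in this section take the form of a fixed amount or a percentage of global annual turnover, whichever is higher. The sketch below works through that structure using the GDPR figures quoted above (up to €20 million or 4% of global annual turnover). The function names and the example turnover figures are hypothetical, and the result is a statutory ceiling, not a prediction of any actual fine.

```python
def max_fine(fixed_cap: int, percent: int, global_turnover: int) -> float:
    """Ceiling under a 'fixed amount or percent of turnover, whichever is higher' rule.

    percent is a whole number (4 means 4%).
    """
    if fixed_cap < 0 or percent < 0 or global_turnover < 0:
        raise ValueError("inputs must be non-negative")
    return max(fixed_cap, global_turnover * percent / 100)


def breakeven_turnover(fixed_cap: int, percent: int) -> float:
    """Turnover above which the percentage prong exceeds the fixed cap."""
    if percent <= 0:
        raise ValueError("percent must be positive")
    return fixed_cap * 100 / percent


if __name__ == "__main__":
    GDPR_FIXED_CAP = 20_000_000  # EUR, as quoted in this section
    GDPR_PERCENT = 4

    # Hypothetical companies with EUR 100 million and EUR 2 billion turnover.
    print(max_fine(GDPR_FIXED_CAP, GDPR_PERCENT, 100_000_000))
    print(max_fine(GDPR_FIXED_CAP, GDPR_PERCENT, 2_000_000_000))
    print(breakeven_turnover(GDPR_FIXED_CAP, GDPR_PERCENT))
```

With these inputs the fixed cap governs until turnover reaches €500 million; beyond that the percentage prong takes over, so the ceiling for the €2 billion company is €80 million. For large multinationals it is the percentage, not the headline fixed figure, that drives exposure, and the same function can be reused for other regimes by passing in their quoted caps.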

China: Centralized and Restrictive Oversight

China has implemented a structured regulatory framework for generative AI (GAI) technologies through the Interim Measures for the Management of Generative Artificial Intelligence Services (effective since August 2023). These measures aim to ensure AI development aligns with the country’s laws, ethics, and security interests.

Effective September 1, 2025, China requires explicit and implicit labeling of AI-generated content.

Three cybersecurity standards for generative AI take effect November 1, 2025.

See China GAI Measures.

Key Regulatory Highlights:

1. Scope of Application:

The measures cover GAI services offered to the public within mainland China, including extraterritorial services targeting Chinese users. Providers outside China must comply with these rules if their services affect Chinese users.

2. General Ethical and Legal Standards:

GAI services must respect Core Socialist Values, prevent discrimination, protect intellectual property, and safeguard user rights (e.g., privacy and data protection). They are also prohibited from producing content that threatens national sovereignty, security, or social stability.

3. Operational Requirements:

Training Data: Providers must ensure lawful sourcing of data, prioritize data quality and diversity, and meet tagging and accuracy standards.

Privacy Protections: Providers must obtain consent for personal data use and follow stringent data governance practices.

Content Moderation: Providers are required to label AI-generated content, promptly address illegal outputs, and cooperate with authorities.

Youth Protections: Measures must be taken to prevent AI overuse or addiction among minors.

Security Assessments: Providers with significant public influence must conduct security reviews in line with algorithmic regulations.

4. Compliance and Penalties:

Enforcement involves coordination with broader laws, such as China’s Cybersecurity Law, Data Security Law, and Personal Information Protection Law.

While fines were initially proposed in draft versions, the current framework focuses on warnings, suspension of services, and other corrective measures for violations. Providers must also explain and justify their models and training data during inspections.

These rules reflect China’s broader intent to balance AI innovation with national security and public interest considerations while promoting ethical AI use. The focus on transparency, accountability, and compliance signals a tightening regulatory environment for AI providers, both domestic and foreign.

Enforcement Mechanisms

China enforces its AI regulations, particularly those governing generative AI (GAI), through a combination of legal, technical, and administrative mechanisms. These enforcement mechanisms align with broader laws and frameworks, such as the Cybersecurity Law, Data Security Law, and Personal Information Protection Law (PIPL), as well as specific AI-focused regulations like the Interim Measures for the Management of Generative Artificial Intelligence Services.

1. Administrative Oversight

Mandatory Filings and Reviews: Providers of GAI services must register their AI models and algorithms with relevant regulatory bodies. Significant public-facing services are subject to security assessments.

Inspections and Reporting: Authorities conduct periodic inspections of AI systems, including auditing training data, algorithms, and use cases. Providers are required to report on their compliance status and address government inquiries promptly.

Algorithm Regulation: Companies must disclose algorithmic methodologies if requested and undergo scrutiny to ensure compliance with national security and ethical standards.

2. Content Moderation and Monitoring

Proactive Compliance: Providers are obligated to monitor output generated by their AI systems, ensuring they do not produce content violating laws, such as material that threatens national security or undermines public order.

Labeling and Transparency: AI-generated content must be clearly labeled to distinguish it from human-generated material, enhancing transparency for users and regulators.

3. Corrective Measures

Warnings and Suspensions: Noncompliance may result in administrative warnings, service suspensions, or temporary bans while violations are addressed.

Service Adjustments: Authorities can mandate modifications to AI systems, including retraining or deactivating certain features, if they pose risks.

4. Legal Penalties

Broader Legal Frameworks: Violations related to data protection, privacy, or cybersecurity laws may lead to penalties under the respective legislation, such as fines or operational restrictions.

Corporate Accountability: Companies are liable for the output of their AI systems and may face legal action if their services harm users or breach intellectual property rights.

5. Cross-Border Compliance

Jurisdictional Reach: Extraterritorial provisions require foreign providers offering services to Chinese users to adhere to the same standards, ensuring global compliance for entities engaging with China’s market.

International Cooperation: Coordination with global cybersecurity and data protection laws ensures that foreign providers align their practices with China’s expectations.

6. Collaboration and Feedback Mechanisms

Public Complaints: Mechanisms for users to report illegal or harmful AI outputs allow authorities to act upon public feedback.

Industry Engagement: Collaborative forums between regulators and AI providers encourage better compliance and understanding of regulatory expectations.

7. Ethical and Youth Protections

Addiction Prevention: Specific measures target the overuse of AI technologies by minors, requiring service providers to implement usage limits and safeguard young users.

Ethical AI Development: Providers must ensure their AI aligns with China’s Core Socialist Values, promoting fairness, inclusivity, and social stability.

In July 2025, China released its Global AI Governance Action Plan, proposing international standards and a global AI cooperation body. China’s national strategy, AI Plus, sets a goal for AI agents (such as intelligent terminals, digital assistants, and automated systems) to reach 90% penetration across key sectors by 2030. This includes widespread integration in areas like healthcare, manufacturing, governance, and public services. Here, “penetration” refers to the extent to which these AI technologies are embedded and actively used within these sectors.

Implications for D&O Liability

The increasing complexity of global AI regulations creates significant risks for directors and officers. Regulatory noncompliance, algorithmic bias, and inadequate governance expose organizations—and their leaders—to financial, legal, and reputational harm.

1. Regulatory Noncompliance

D&Os are responsible for ensuring their organizations meet regulatory requirements. Failure to comply with laws like the EU’s AI Act, GDPR, or China’s PIPL can result in significant fines, lawsuits, and operational restrictions. For instance, the most serious GDPR violations can lead to penalties of up to €20 million or 4% of global annual turnover, whichever is higher.

To mitigate these risks, boards must:

Implement comprehensive compliance programs aligned with jurisdiction-specific laws.

Conduct regular audits of AI systems to assess compliance and identify vulnerabilities.

Appoint dedicated AI governance committees to oversee regulatory adherence.

2. Algorithmic Bias and Discrimination

AI systems, when improperly designed, can perpetuate bias, resulting in discriminatory outcomes in hiring, lending, and other sensitive areas. Such incidents may lead to lawsuits under anti-discrimination laws, such as Title VII of the Civil Rights Act, which the EEOC enforces in the U.S., or to claims under GDPR’s provisions on automated decision-making in the EU.

Boards must prioritize ethical AI practices by:

Conducting impact assessments to identify and mitigate biases.

Ensuring diverse datasets and inclusive design practices.

Engaging third-party audits to validate algorithmic fairness.

3. Data Privacy Violations

AI’s reliance on vast datasets amplifies privacy risks. Regulatory frameworks like GDPR, PIPL, and CCPA impose stringent data protection obligations. Noncompliance can result in hefty fines and class-action lawsuits.

To address privacy risks, D&Os should:

Establish data governance frameworks ensuring compliance with relevant laws.

Incorporate privacy-by-design principles into AI development.

Monitor cross-border data transfers to meet jurisdictional requirements.

4. Emerging Litigation Risks

Litigation related to AI systems is on the rise, including claims of negligence, product liability, and breach of fiduciary duty. Directors may face personal liability for failing to oversee AI governance adequately.

To reduce litigation exposure, boards should:

Secure robust D&O insurance policies covering AI-related risks.

Strengthen internal controls to document decision-making processes.

Stay informed about evolving litigation trends and precedents.

Implications for D&O Insurance

The rise of AI-related risks is reshaping the landscape for D&O insurance, requiring companies and insurers to adapt to this new reality.

Coverage Gaps in AI-Related Exposures

Traditional D&O insurance policies may not fully address the liabilities emerging from AI-related issues. Key gaps include:

Regulatory Fines: Many policies exclude coverage for regulatory fines, such as penalties under the EU AI Act or FTC enforcement actions.

Intentional Misconduct: If directors are found to have knowingly deployed harmful or biased AI systems, coverage for resulting lawsuits may be denied.

Cross-Border Risks: Multinational companies operating under conflicting AI regulations may face uncovered liabilities stemming from jurisdictional discrepancies.

Boards must carefully review policy terms to ensure adequate coverage for AI-related exposures, including coverage for regulatory fines where permitted by law.

Underwriting Challenges for AI Risks

Insurers are increasingly scrutinizing corporate AI governance during the underwriting process. Factors influencing premiums and coverage availability include:

The company’s track record of regulatory compliance.

The robustness of AI oversight frameworks, including auditing processes and accountability structures.

The deployment of high-risk AI systems in sensitive areas such as healthcare or finance.

By demonstrating strong governance practices, companies can secure more favorable underwriting terms and reduce premium costs.

Evolving Policy Solutions

To address the unique risks posed by AI, insurers are beginning to develop specialized endorsements or standalone AI liability products. These policies may cover:

Regulatory fines and penalties.

Class-action lawsuits stemming from algorithmic bias or data breaches.

Litigation costs associated with cross-border AI compliance failures.

Boards should engage with their insurers to explore these emerging solutions and ensure their coverage evolves alongside the risk landscape.

Proactive Strategies for Risk Mitigation

To navigate the risks associated with AI, directors must adopt proactive strategies that address compliance, governance, and ethical considerations:

Implement Comprehensive AI Governance: Establish policies that emphasize transparency, accountability, and risk mitigation, with regular audits of AI systems.

Engage Independent Auditors: Third-party audits provide objective assessments of AI compliance and ethical practices, enhancing credibility with stakeholders.

Monitor Global Regulatory Trends: Staying informed about legislative developments in key markets helps organizations anticipate and adapt to new requirements.

Collaborate with Insurers: Open communication with insurers ensures D&O policies adequately address AI-related exposures, including cross-border liabilities.

Integrate AI into Enterprise Risk Management (ERM): Treat AI-related risks as a critical component of the broader risk management framework.

Emerging Trends and the Future of AI Regulation

The regulatory landscape for AI is dynamic and multifaceted, with several emerging trends that directors must monitor:

1. International Harmonization of AI Standards

Efforts to align AI regulations across borders are gaining traction. Initiatives such as the U.S.-EU Trade and Technology Council (TTC) and the OECD’s AI Principles aim to establish common principles for ethical AI use. However, significant differences in regulatory priorities may hinder full alignment.

2. Sector-Specific AI Regulations

Industries such as healthcare, finance, and transportation are seeing the introduction of sector-specific AI regulations that address their unique risks. Boards must ensure their organizations tailor compliance strategies to meet these specialized requirements.

3. Litigation Trends and Precedents

AI-related lawsuits are shaping legal standards for algorithmic accountability and bias mitigation. Boards should monitor litigation trends to anticipate potential exposures and refine governance practices.

4. Technological Advancements

Emerging AI capabilities, such as generative AI and autonomous systems, are introducing new regulatory challenges. Issues surrounding explainability, accountability, and liability require boards to stay ahead of the technological curve.

5. Public Pressure and Corporate Social Responsibility

Stakeholders are increasingly demanding responsible AI practices, pushing organizations to align their AI strategies with broader CSR goals. Transparent communication about AI governance can enhance trust and reputation.

Opportunities Amid Challenges

While AI regulations pose challenges, they also offer opportunities for businesses to build trust and competitive advantage. Companies that prioritize ethical AI practices, transparency, and compliance can differentiate themselves in the market.

1. Competitive Differentiation

Complying with stringent frameworks like the EU’s AI Act signals a commitment to responsible AI, attracting customers and investors who value ethical practices.

2. Risk Mitigation

Proactive governance reduces the likelihood of regulatory penalties, lawsuits, and reputational damage, safeguarding long-term business viability.

3. Innovation Enablement

Regulatory sandboxes in jurisdictions like the EU and UK enable businesses to test AI innovations in controlled environments, fostering growth while managing risks.

Conclusion

Navigating global AI regulations requires a nuanced understanding of diverse legal frameworks and their implications for organizational liability. For directors and officers, the stakes are high: noncompliance, algorithmic failures, and inadequate governance can lead to significant financial and reputational harm. By adopting robust compliance strategies, prioritizing ethical practices, and leveraging opportunities within the regulatory landscape, businesses can position themselves for success in the AI-driven future.

In this rapidly evolving legal environment, proactive engagement and informed decision-making are critical for boards and leadership teams. The integration of AI into governance frameworks not only ensures compliance but also aligns innovation with ethical and societal values, fostering sustainable growth in an increasingly AI-centric world.

Note: This material is for general informational purposes only and is not legal advice. It is not designed to be comprehensive, and it may not apply to your particular facts and circumstances. Consult as needed with your own attorney or other professional advisor.